Advanced Prompting

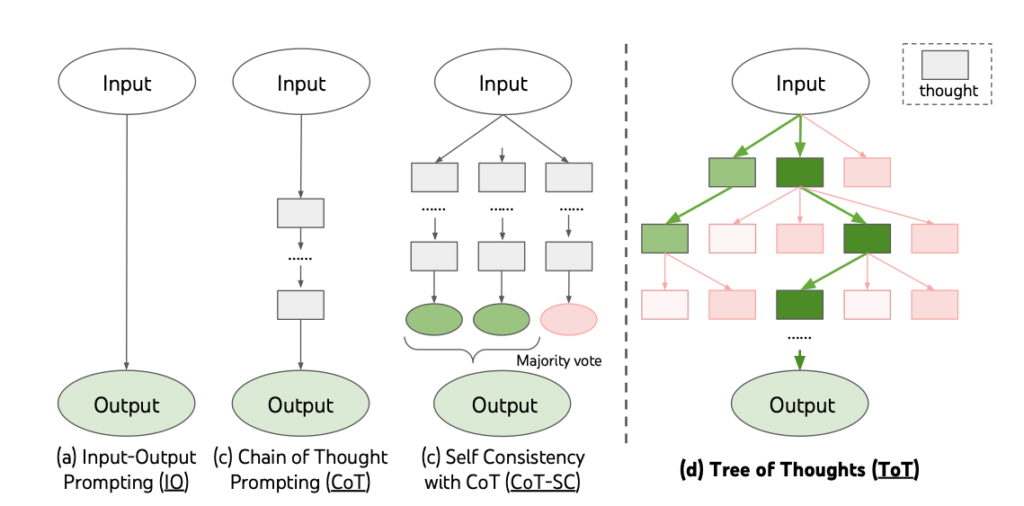

This paper investigates the impact of four prompting strategies on two key datasets, Game 24 and Crossword: three basic yet versatile ones (zero-shot, few-shot, and chain of thought) and one advanced method, Tree of Thought (ToT). Our research reveals that while the state-of-the-art ToT strategy is effective on the LLM it was originally evaluated with (GPT-4), it does not adapt well to smaller models such as Vicuna-7b. A detailed examination of ToT’s performance on these benchmark datasets uncovers two major limitations, which we address with a novel method. Our approach not only resolves the cases where ToT typically struggles but also runs up to 5 times faster. Additionally, we extend the Vicuna-7b model with an image-to-text conversion tool, giving it multi-modal functionality.
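For readers unfamiliar with the Game 24 benchmark: each instance gives four numbers that must be combined with +, -, * and /, using every number exactly once, to reach 24. In the original ToT setup a solution is decomposed into three steps, each replacing two of the remaining numbers with the result of one operation, and the LLM proposes and scores these intermediate steps. The sketch below is not the method of this paper. It is a hypothetical, LLM-free brute-force search over that same three-step tree, which can serve as an exact checker for model outputs. The names `solve24` and `_search` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import combinations


def solve24(numbers, target=24):
    """Return one expression string that evaluates to target, or None.

    Illustrative brute force (not the paper's method): each step picks two of
    the remaining values and replaces them with one arithmetic result, so four
    numbers are reduced to one value in three steps.
    """
    # Pair each exact rational value with the expression text that produced it.
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target))


def _search(items, target):
    # Leaf of the search tree: a single value remains.
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        # Every result of combining a and b; - and / are tried in both orders.
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        # Recurse on the smaller set of remaining values.
        for value, expr in candidates:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found
    return None


if __name__ == "__main__":
    print(solve24([4, 9, 10, 13]))  # some expression equal to 24
    print(solve24([1, 1, 1, 1]))    # None: no solution exists
```

Exact `Fraction` arithmetic is used so that instances such as 3, 3, 8, 8 (which need the non-integer intermediate 8/3) are not lost to floating-point rounding. ToT replaces this exhaustive enumeration with a model-guided search that expands only the intermediate steps the LLM rates as promising.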